Tutorials

I. Visual Content Generation in the Era of Large Foundation Models

Presenters:

- Leigang Qu
  - National University of Singapore, Singapore
  - Email: leigangqu@gmail.com

- Fei Shen
  - Nanjing University of Science and Technology, Nanjing, China
  - Email: feishen@njust.edu.cn

- Zhenglin Zhou
  - Zhejiang University, Hangzhou, China
  - Email: zhenglinzhou@zju.edu.cn

- Jiayi Lyu
  - University of Chinese Academy of Sciences, Beijing, China
  - Email: lyujiayi21@mails.ucas.ac.cn

- Wenjie Wang
  - University of Science and Technology of China, Hefei, China
  - Email: wenjiewang96@gmail.com

- Lu Jiang
  - ByteDance, San Jose, USA
  - Email: lu.jiang@bytedance.com

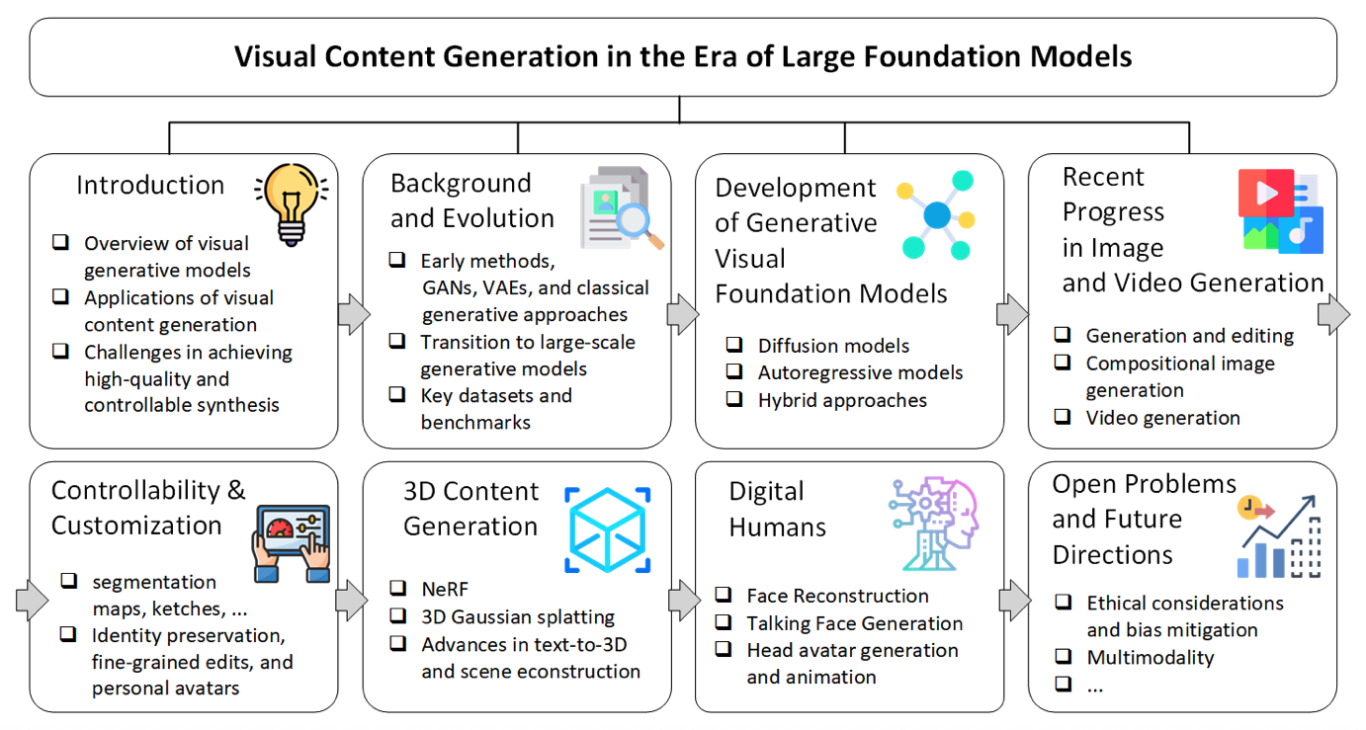

Short Description:

Rapid advances in large foundation models have transformed visual content generation, with impact on image synthesis, video generation, and 3D modeling. This tutorial explores state-of-the-art techniques and methodologies for visual content generation, with an emphasis on large-scale generative models. It covers fundamental principles, model architectures, recent breakthroughs, and practical applications. We will discuss generative paradigms including diffusion models, autoregressive models, and large multimodal models, highlighting their strengths and limitations. We will also examine the challenges of controllability, personalization, and realism in generated content, along with open research problems and future directions. By the end of the tutorial, attendees will understand contemporary visual content generation techniques and their applications well enough to apply these models in their own research and projects.

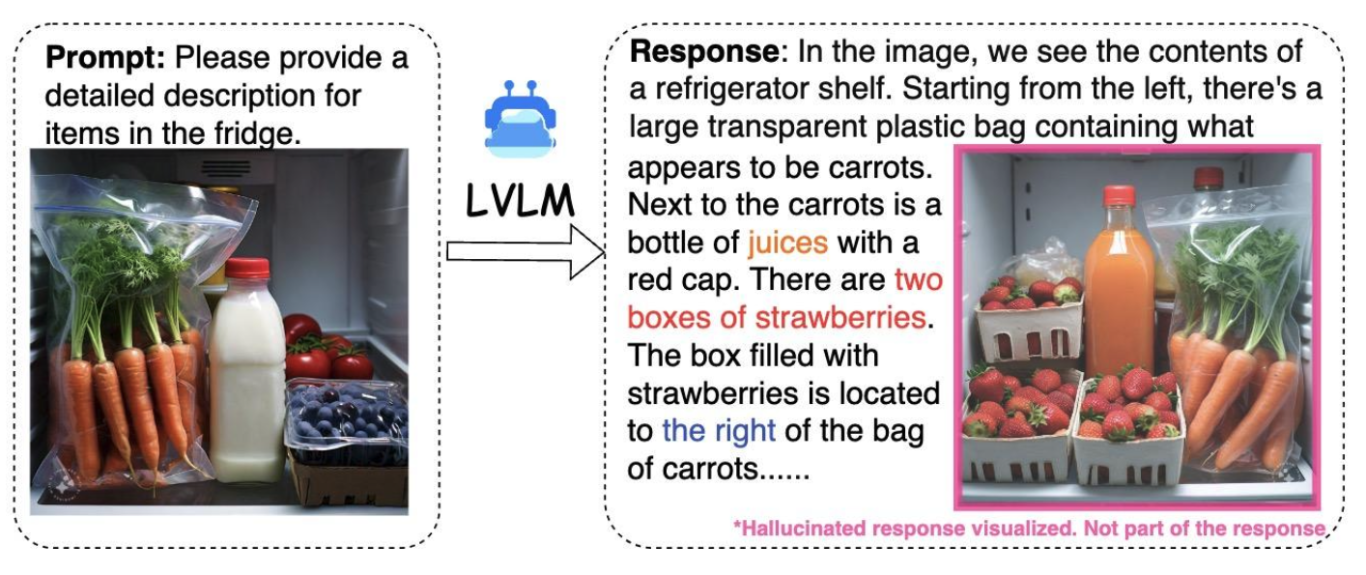

II. Hallucinations in Large Language Models and Large Vision-Language Models

Presenters:

- Liqiang Jing
  - University of Texas at Dallas, USA
  - Email: jingliqiang6@gmail.com

- Yue Zhang
  - University of Texas at Dallas, USA
  - Email: yue.zhang@utdallas.edu

- Xinya Du
  - University of Texas at Dallas, USA
  - Email: xinya.du@utdallas.edu

Short Description:

Multimodal Large Language Models (MLLMs), Large Vision-Language Models (LVLMs), and Large Language Models (LLMs) have demonstrated remarkable capabilities across various tasks, including text-based reasoning and multimodal content generation. However, these models frequently generate hallucinations (factually incorrect or misleading content) that pose significant challenges, particularly in high-stakes domains such as healthcare, law, and finance. This tutorial provides a comprehensive exploration of hallucinations in MLLMs, LVLMs, and LLMs, examining their causes, detection methods, and mitigation strategies. We survey the different approaches to hallucination evaluation and benchmarking, and review state-of-the-art techniques for hallucination mitigation.